Trustworthy Machine Learning

Oct 14, 2022

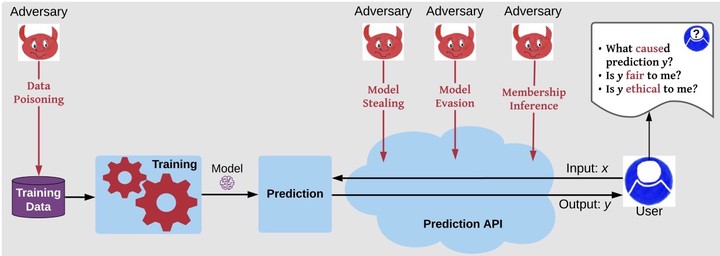

We study robustness (to training data poisoning, model evasion, model stealing), privacy (against training example membership inference), and the interaction among robustness, privacy, transparency, and fairness properties in machine learning.

Birhanu Eshete

Principal Investigator

Trustworthy machine learning, cybercrime analysis, and cyber threat intelligence.

Publications

DeResistor: Toward Detection-Resistant Probing for Evasion of Internet Censorship

The arms race between Internet freedom advocates and censors has catalyzed the emergence of sophisticated blocking techniques and …

MIAShield: Defending Membership Inference Attacks via Preemptive Exclusion of Members

In membership inference attacks (MIAs), an adversary observes the predictions of a model to determine whether a sample is part of the …

Adversarial Detection of Censorship Measurements

The arms race between Internet freedom technologists and censoring regimes has catalyzed the deployment of more sophisticated censoring …

DP-UTIL: Comprehensive Utility Analysis of Differential Privacy in Machine Learning

Differential Privacy (DP) has emerged as a rigorous formalism to reason about quantifiable privacy leakage. In machine learning (ML), …

EG-Booster: Explanation-Guided Booster of ML Evasion Attacks

The widespread usage of machine learning (ML) in a myriad of domains has raised questions about its trustworthiness in security-critical …

Morphence: Moving Target Defense Against Adversarial Examples

Robustness to adversarial examples of machine learning models remains an open topic of research. Attacks often succeed by repeatedly …

Making Machine Learning Trustworthy

Machine learning (ML) has advanced dramatically during the past decade and continues to achieve impressive human-level performance on …

Explanation-Guided Diagnosis of Machine Learning Evasion Attacks

Machine Learning (ML) models are susceptible to evasion attacks. Evasion accuracy is typically assessed using aggregate evasion rate, …

PRICURE: Privacy-Preserving Collaborative Inference in a Multi-Party Setting

When multiple parties that deal with private data aim for a collaborative prediction task such as medical image classification, they …

Best-Effort Adversarial Approximation of Black-Box Malware Classifiers

An adversary who aims to steal a black-box model repeatedly queries the model via a prediction API to learn a function that …