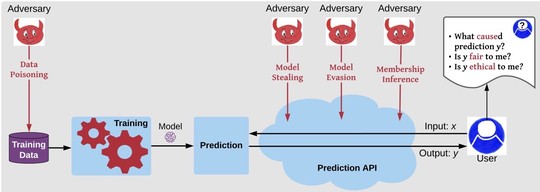

At DSPLab, our research focuses on trustworthy machine learning, cybercrime analysis, and cyber threat intelligence. Find us on GitHub for tools and datasets, and on Twitter for the latest news.

View from DSPLab Window

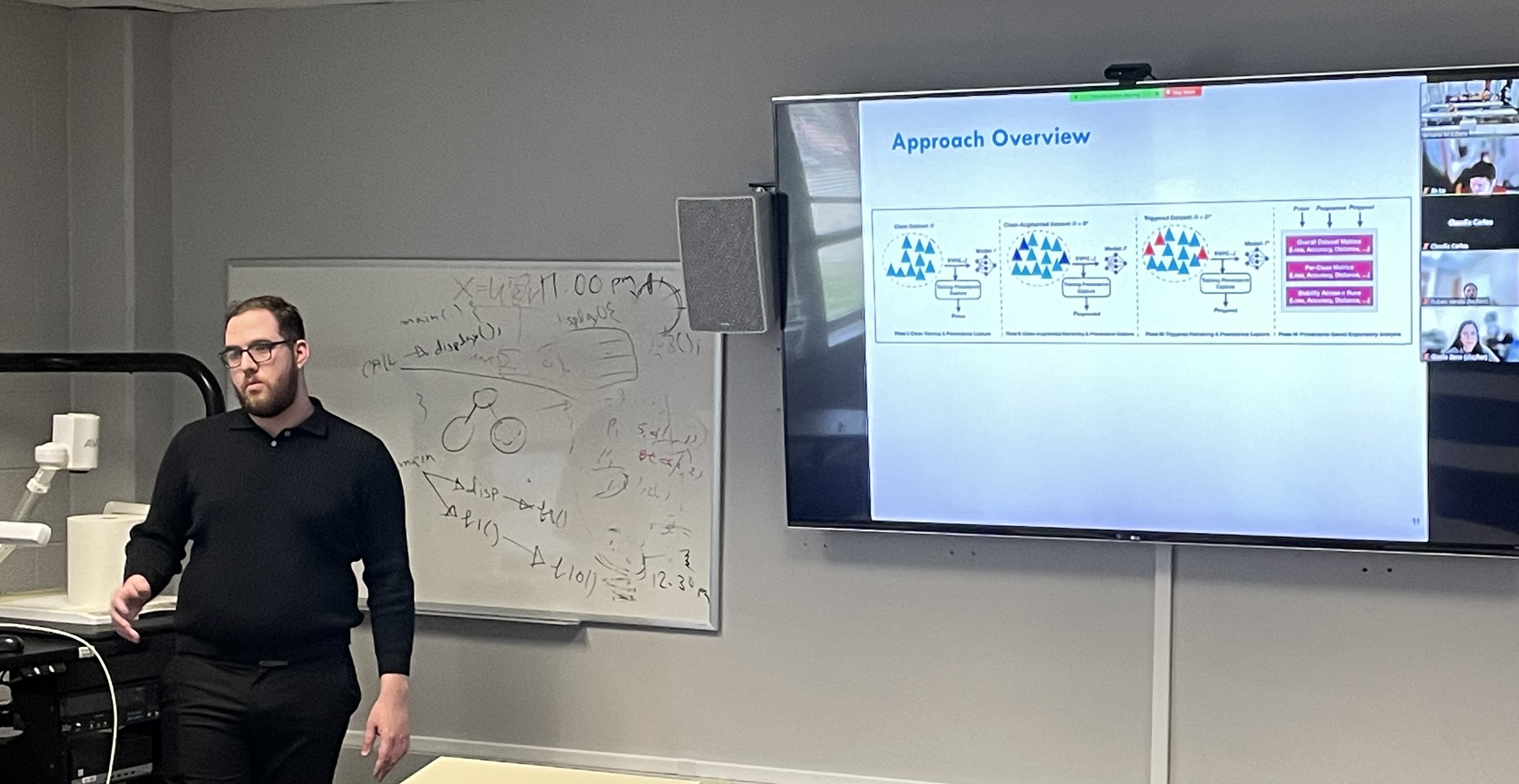

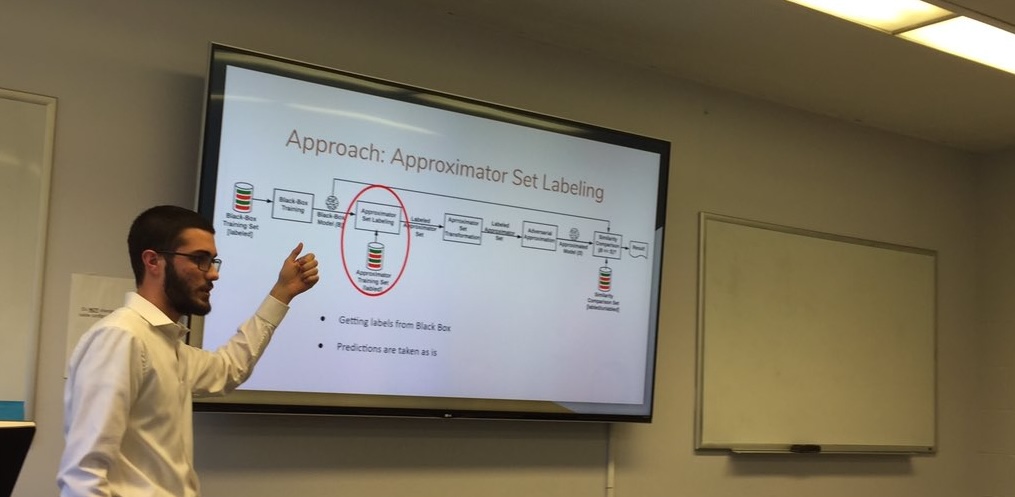

Abe Amich Presenting a Paper at USENIX Security

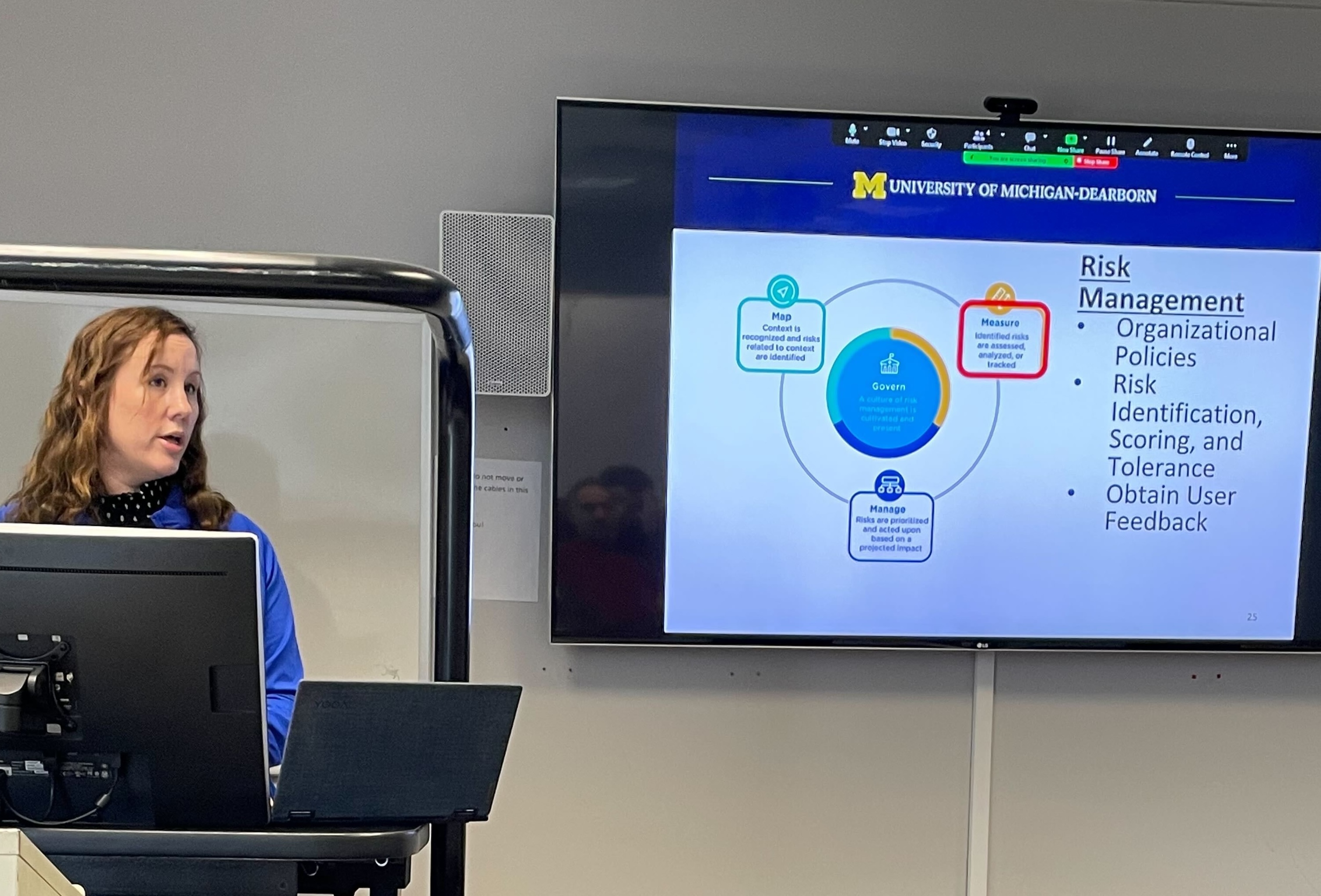

Abdullah Ali's Thesis Defense